# 1. Set up Elasticsearch

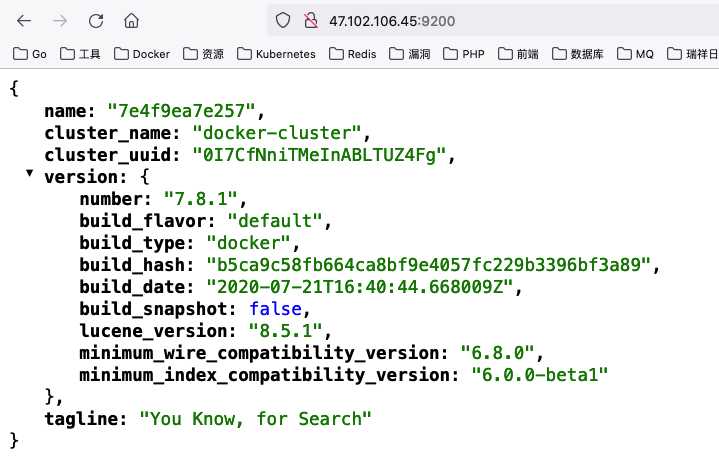

docker pull elasticsearch:7.8.1

docker run -itd -e ES_JAVA_OPTS="-Xms512m -Xmx512m" -e "discovery.type=single-node" -p 9200:9200 -p 9300:9300 --name es7.8.1 elasticsearch:7.8.1
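Before moving on, it can help to confirm that Elasticsearch answers on the published port. The following is a minimal sketch, assuming `curl` is installed on the host and port 9200 is mapped as in the command above. The helper name `es_health` is hypothetical and not part of any tool.

```shell
# Hypothetical helper: query the cluster health endpoint of Elasticsearch.
# $1 is the base URL; it defaults to the port published by the docker run above.
es_health() {
  curl -fsS "${1:-http://localhost:9200}/_cluster/health?pretty"
}
```

Calling `es_health` with no arguments targets `http://localhost:9200`. The container may need some seconds after `docker run` before it responds, so a failed first attempt does not necessarily mean the setup is wrong.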

# 2. Set up Kibana

docker pull kibana:7.7.1

docker run --link es7.8.1:elasticsearch -p 5601:5601 -d --name kibana7.7.1 kibana:7.7.1

kibana.yml needs to be configured here; otherwise Kibana looks for Elasticsearch on localhost by default and cannot find it.

Open a shell inside the container:

docker exec -it kibana7.7.1 /bin/bash

Edit the kibana.yml file:

vi config/kibana.yml

server.name: kibana

server.host: "0"

elasticsearch.hosts: [ "http://elasticsearch:9200" ]

monitoring.ui.container.elasticsearch.enabled: true

i18n.locale: "zh-CN"


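Two settings matter here. The first is the Elasticsearch address, http://xxx:9200, where xxx is the alias created by --link in the docker run command above. The second is the `i18n.locale` language setting: the Kibana interface is English by default, and setting it to zh-CN switches it to Chinese.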

After saving the configuration file, ping the hostname you just configured. If the ping succeeds, the two containers can communicate with each other.
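The same connectivity check can be run from the host without opening a shell in the container. This is a sketch under assumptions: it uses the container name `kibana7.7.1` and the alias `elasticsearch` from the `docker run --link` command above, and it assumes `curl` is available inside the Kibana image, which makes an HTTP request a possible alternative if `ping` is not installed there. `check_link` is a hypothetical helper name.

```shell
# Hypothetical helper: from inside the Kibana container, request the
# Elasticsearch root endpoint through the --link alias.
# $1: container name, $2: alias given to --link.
check_link() {
  container="${1:-kibana7.7.1}"
  alias_host="${2:-elasticsearch}"
  docker exec "$container" curl -fsS "http://${alias_host}:9200"
}
```

If the alias resolves and Elasticsearch is up, `check_link` prints the JSON banner that Elasticsearch serves at its root URL. A name-resolution error points at the `--link` alias rather than at kibana.yml.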

Restart the Kibana container:

docker restart kibana7.7.1

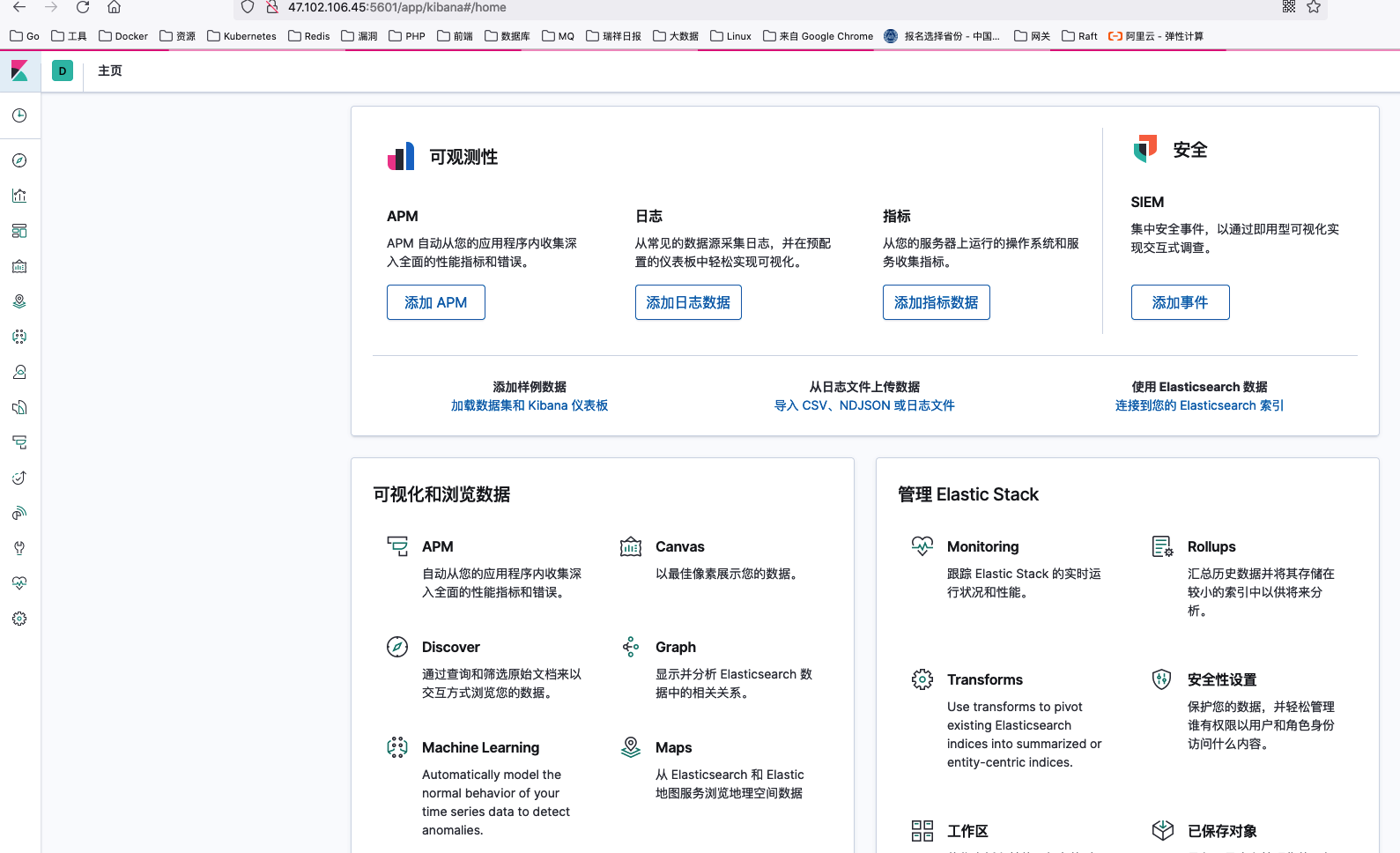

Open http://localhost:5601/. If the Kibana interface loads, the startup succeeded.

# 3. Set up Filebeat

docker pull elastic/filebeat:7.7.1

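Once the pull completes, do not start the container yet. First create a configuration file, filebeat.docker.yml, that will be mounted into the container. Create it in a directory of your choice: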

#=========================== Filebeat inputs ==============
filebeat.inputs:
- type: log
  enabled: true
  ## Log paths to collect; multiple paths can be listed
  paths:
    - /usr/share/filebeat/logs/*.log
    - /usr/share/filebeat/logs/SyncWorkUserProcess/*.log
    - /usr/share/filebeat/logs/SyncWorkUserCrontab/*.log
    - /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/*.log
    - /usr/share/filebeat/logs/SyncUserDayBalanceProcess/*.log
    - /usr/share/filebeat/logs/CardPay/*.log
  ## Multiline merge rule, keyed on the timestamp: the lines logged under one timestamp form one event
  multiline.pattern: '^\d{4}-\d{2}-\d{2}'
  multiline.negate: true
  multiline.match: after

## Kibana address, used to load the Filebeat dashboards
setup.kibana.host: "http://kibana:5601"
setup.dashboards.enabled: true

#-------------------------- Elasticsearch output ---------
output.elasticsearch:
  hosts: ["http://172.28.191.155:9200"]
  index: "filebeat-%{+yyyy.MM.dd}"

setup.template.name: "my-log"
setup.template.pattern: "my-log-*"
json.keys_under_root: false
json.overwrite_keys: true

## Rules for parsing JSON-formatted logs
#processors:
#- decode_json_fields:
#    fields: [""]
#    target: json
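To make the multiline settings in this configuration concrete, the sketch below emulates them with `awk`. It is only an illustration of the grouping rule, not how Filebeat is implemented: with `multiline.negate: true` and `multiline.match: after`, a line that starts with a date opens a new event, and every line that does not is appended to the event before it. The function name `group_events`, the sample log lines, and the ` | ` separator used for display are all assumptions made for this example.

```shell
# Rough emulation of multiline.pattern '^\d{4}-\d{2}-\d{2}' with
# negate: true and match: after. A line starting with YYYY-MM-DD opens a
# new event; any other line is appended to the current event.
# Appended lines are joined with " | " purely for display.
group_events() {
  awk '
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]/ {
      if (have) print buf        # flush the previous event
      buf = $0; have = 1; next
    }
    {
      if (have) buf = buf " | " $0; else buf = $0
      have = 1
    }
    END { if (have) print buf }  # flush the last event
  '
}

# Four input lines (a stack trace plus a follow-up entry) become two events.
printf '%s\n' \
  '2022-02-15 07:14:56 ERROR request failed' \
  '  at handler()' \
  '  at main()' \
  '2022-02-15 07:14:57 INFO retry ok' | group_events
```

The stack-trace lines stay attached to the `ERROR` entry, which is the reason for merging on a date prefix: a multi-line exception shows up in Kibana as one document instead of several fragments.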


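Why not edit the configuration file directly inside the Filebeat container? Because the configuration file in the Filebeat container is read-only and cannot be changed, so the only option is to mount a configuration file from the host.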

Once the configuration file is ready, start the Filebeat container:

docker run -d -v /root/filebeat.docker.yml:/usr/share/filebeat/filebeat.yml -v /root/Log:/usr/share/filebeat/logs --link es7.8.1:elasticsearch --link kibana7.7.1:kibana --name filebeat7.7.1 elastic/filebeat:7.7.1

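Here, -v mounts a path from the host into the container. The first mount maps the configuration file, and the second maps the log directory to collect. Without the log directory mount, Filebeat collects nothing, because the configured paths do not exist inside the container.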
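Since both mounts depend on host paths, a quick check before `docker run` can save a debugging round. When a host path given to `-v` does not exist, Docker creates it as an empty directory, so a missing config file ends up mounted as a directory. The sketch below assumes the host paths from the command above (`/root/filebeat.docker.yml` and `/root/Log`). `preflight` is a hypothetical helper name.

```shell
# Hypothetical pre-flight check for the two host paths passed to -v.
# $1: config file on the host, $2: log directory on the host.
preflight() {
  config="${1:-/root/filebeat.docker.yml}"
  logdir="${2:-/root/Log}"
  [ -f "$config" ] || { echo "missing config file: $config" >&2; return 1; }
  [ -d "$logdir" ] || { echo "missing log directory: $logdir" >&2; return 1; }
  echo "ok: $config and $logdir exist"
}
```

Running `preflight && docker run ...` starts the container only when both paths are in place.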

After startup, check the logs with docker logs:

[root@8U32G-gan ~]# docker logs -f filebeat7.7.1

2022-02-15T07:14:56.817Z INFO instance/beat.go:621 Home path: [/usr/share/filebeat] Config path: [/usr/share/filebeat] Data path: [/usr/share/filebeat/data] Logs path: [/usr/share/filebeat/logs]

2022-02-15T07:14:56.820Z INFO instance/beat.go:629 Beat ID: f8dbd4b2-063f-417b-93b3-cfea9f821982

2022-02-15T07:14:56.820Z INFO [seccomp] seccomp/seccomp.go:124 Syscall filter successfully installed

2022-02-15T07:14:56.820Z INFO [beat] instance/beat.go:957 Beat info {"system_info": {"beat": {"path": {"config": "/usr/share/filebeat", "data": "/usr/share/filebeat/data", "home": "/usr/share/filebeat", "logs": "/usr/share/filebeat/logs"}, "type": "filebeat", "uuid": "f8dbd4b2-063f-417b-93b3-cfea9f821982"}}}

2022-02-15T07:14:56.820Z INFO [beat] instance/beat.go:966 Build info {"system_info": {"build": {"commit": "932b273e8940575e15f10390882be205bad29e1f", "libbeat": "7.7.1", "time": "2020-05-28T15:24:11.000Z", "version": "7.7.1"}}}

2022-02-15T07:14:56.820Z INFO [beat] instance/beat.go:969 Go runtime info {"system_info": {"go": {"os":"linux","arch":"amd64","max_procs":8,"version":"go1.13.9"}}}

2022-02-15T07:14:56.821Z INFO [beat] instance/beat.go:973 Host info {"system_info": {"host": {"architecture":"x86_64","boot_time":"2022-02-15T06:35:45Z","containerized":true,"name":"f103ccdcee98","ip":["127.0.0.1/8","172.17.0.4/16"],"kernel_version":"3.10.0-1127.19.1.el7.x86_64","mac":["02:42:ac:11:00:04"],"os":{"family":"redhat","platform":"centos","name":"CentOS Linux","version":"7 (Core)","major":7,"minor":8,"patch":2003,"codename":"Core"},"timezone":"UTC","timezone_offset_sec":0,"id":"8cc8b83c2903418e8e1fa9d45d4fc3e5"}}}

2022-02-15T07:14:56.821Z INFO [beat] instance/beat.go:1002 Process info {"system_info": {"process": {"capabilities": {"inheritable":["chown","dac_override","fowner","fsetid","kill","setgid","setuid","setpcap","net_bind_service","net_raw","sys_chroot","mknod","audit_write","setfcap"],"permitted":null,"effective":null,"bounding":["chown","dac_override","fowner","fsetid","kill","setgid","setuid","setpcap","net_bind_service","net_raw","sys_chroot","mknod","audit_write","setfcap"],"ambient":null}, "cwd": "/usr/share/filebeat", "exe": "/usr/share/filebeat/filebeat", "name": "filebeat", "pid": 1, "ppid": 0, "seccomp": {"mode":"filter","no_new_privs":false}, "start_time": "2022-02-15T07:14:55.700Z"}}}

2022-02-15T07:14:56.822Z INFO instance/beat.go:297 Setup Beat: filebeat; Version: 7.7.1

......

2022-02-15T07:17:22.606Z INFO instance/beat.go:791 Kibana dashboards successfully loaded.

2022-02-15T07:17:22.606Z INFO instance/beat.go:438 filebeat start running.

2022-02-15T07:17:22.606Z INFO registrar/registrar.go:145 Loading registrar data from /usr/share/filebeat/data/registry/filebeat/data.json

2022-02-15T07:17:22.607Z INFO registrar/registrar.go:152 States Loaded from registrar: 0

2022-02-15T07:17:22.607Z INFO beater/crawler.go:73 Loading Inputs: 1

2022-02-15T07:17:22.607Z INFO log/input.go:152 Configured paths: [/usr/share/filebeat/logs/*.log /usr/share/filebeat/logs/SyncWorkUserProcess/*.log /usr/share/filebeat/logs/SyncWorkUserCrontab/*.log /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/*.log /usr/share/filebeat/logs/SyncUserDayBalanceProcess/*.log /usr/share/filebeat/logs/CardPay/*.log]

2022-02-15T07:17:22.607Z INFO input/input.go:114 Starting input of type: log; ID: 10770555606981107452

2022-02-15T07:17:22.607Z INFO beater/crawler.go:105 Loading and starting Inputs completed. Enabled inputs: 1

2022-02-15T07:17:22.608Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-13.log

2022-02-15T07:17:22.608Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/2022-02-14.log

2022-02-15T07:17:22.608Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/2022-02-15.log

2022-02-15T07:17:22.608Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceProcess/2022-02-13.log

2022-02-15T07:17:22.611Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/CardPay/2022-02-12.log

2022-02-15T07:17:22.614Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/CardPay/2022-02-14.log

2022-02-15T07:17:22.616Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-10.log

2022-02-15T07:17:22.619Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceProcess/2022-02-15.log

2022-02-15T07:17:22.621Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/CardPay/2022-02-09.log

2022-02-15T07:17:22.625Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-09.log

2022-02-15T07:17:22.627Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-08.log

2022-02-15T07:17:22.629Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-14.log

2022-02-15T07:17:22.632Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/2022-02-10.log

2022-02-15T07:17:22.634Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/CardPay/2022-02-10.log

2022-02-15T07:17:22.637Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/CardPay/2022-02-11.log

2022-02-15T07:17:22.639Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserProcess/2022-02-14.log

2022-02-15T07:17:22.643Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-15.log

2022-02-15T07:17:22.646Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/2022-02-09.log

2022-02-15T07:17:22.648Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceProcess/2022-02-09.log

2022-02-15T07:17:22.651Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceProcess/2022-02-12.log

2022-02-15T07:17:22.654Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-11.log

2022-02-15T07:17:22.657Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserProcess/2022-02-10.log

2022-02-15T07:17:22.660Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserProcess/2022-02-11.log

2022-02-15T07:17:22.662Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserCrontab/2022-02-12.log

2022-02-15T07:17:22.664Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserProcess/2022-02-09.log

2022-02-15T07:17:22.667Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/swoole.log

2022-02-15T07:17:22.669Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/2022-02-12.log

2022-02-15T07:17:22.672Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceProcess/2022-02-10.log

2022-02-15T07:17:22.674Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/log_202202.log

2022-02-15T07:17:22.677Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceProcess/2022-02-11.log

2022-02-15T07:17:22.680Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceProcess/2022-02-14.log

2022-02-15T07:17:22.683Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserProcess/2022-02-13.log

2022-02-15T07:17:22.685Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserProcess/2022-02-12.log

2022-02-15T07:17:22.688Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/2022-02-11.log

2022-02-15T07:17:22.690Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncUserDayBalanceCrontab/2022-02-13.log

2022-02-15T07:17:22.693Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/CardPay/2022-02-13.log

2022-02-15T07:17:22.695Z INFO log/harvester.go:297 Harvester started for file: /usr/share/filebeat/logs/SyncWorkUserProcess/2022-02-08.log

2022-02-15T07:17:28.609Z INFO [publisher_pipeline_output] pipeline/output.go:101 Connecting to backoff(elasticsearch(http://172.28.191.155:9200))

2022-02-15T07:17:28.611Z INFO [esclientleg] eslegclient/connection.go:263 Attempting to connect to Elasticsearch version 7.8.1

2022-02-15T07:17:28.624Z INFO [license] licenser/es_callback.go:51 Elasticsearch license: Basic

2022-02-15T07:17:28.625Z INFO [esclientleg] eslegclient/connection.go:263 Attempting to connect to Elasticsearch version 7.8.1

2022-02-15T07:17:28.642Z INFO [index-management] idxmgmt/std.go:258 Auto ILM enable success.

2022-02-15T07:17:28.668Z INFO [index-management] idxmgmt/std.go:271 ILM policy successfully loaded.

2022-02-15T07:17:28.668Z INFO [index-management] idxmgmt/std.go:410 Set setup.template.name to '{filebeat-7.7.1 {now/d}-000001}' as ILM is enabled.

2022-02-15T07:17:28.668Z INFO [index-management] idxmgmt/std.go:415 Set setup.template.pattern to 'filebeat-7.7.1-*' as ILM is enabled.

2022-02-15T07:17:28.668Z INFO [index-management] idxmgmt/std.go:449 Set settings.index.lifecycle.rollover_alias in template to {filebeat-7.7.1 {now/d}-000001} as ILM is enabled.

2022-02-15T07:17:28.668Z INFO [index-management] idxmgmt/std.go:453 Set settings.index.lifecycle.name in template to {filebeat {"policy":{"phases":{"hot":{"actions":{"rollover":{"max_age":"30d","max_size":"50gb"}}}}}}} as ILM is enabled.

2022-02-15T07:17:28.669Z INFO template/load.go:169 Existing template will be overwritten, as overwrite is enabled.

2022-02-15T07:17:28.800Z INFO template/load.go:109 Try loading template filebeat-7.7.1 to Elasticsearch

2022-02-15T07:17:28.900Z INFO template/load.go:101 template with name 'filebeat-7.7.1' loaded.

2022-02-15T07:17:28.900Z INFO [index-management] idxmgmt/std.go:295 Loaded index template.

2022-02-15T07:17:29.083Z INFO [index-management] idxmgmt/std.go:306 Write alias successfully generated.

2022-02-15T07:17:29.083Z INFO [publisher_pipeline_output] pipeline/output.go:111 Connection to backoff(elasticsearch(http://172.28.191.155:9200)) established


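The log shows that Filebeat started successfully. If startup fails, check whether the Elasticsearch and Kibana hosts in the Filebeat configuration file are correct.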
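Filebeat ships `test config` and `test output` subcommands that can help narrow down a wrong host. The sketch below runs both inside the container, assuming the container name `filebeat7.7.1` from the `docker run` command above and assuming the container is still running (if it has already exited, `docker logs` is the place to look instead). `fb_selfcheck` is a hypothetical helper name.

```shell
# Hypothetical helper: validate the mounted config, then try to reach the
# configured Elasticsearch output from inside the Filebeat container.
# $1: container name.
fb_selfcheck() {
  container="${1:-filebeat7.7.1}"
  docker exec "$container" filebeat test config &&
    docker exec "$container" filebeat test output
}
```

The second command runs only if the first succeeds, so a syntax problem in the YAML is reported before any connection attempt.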

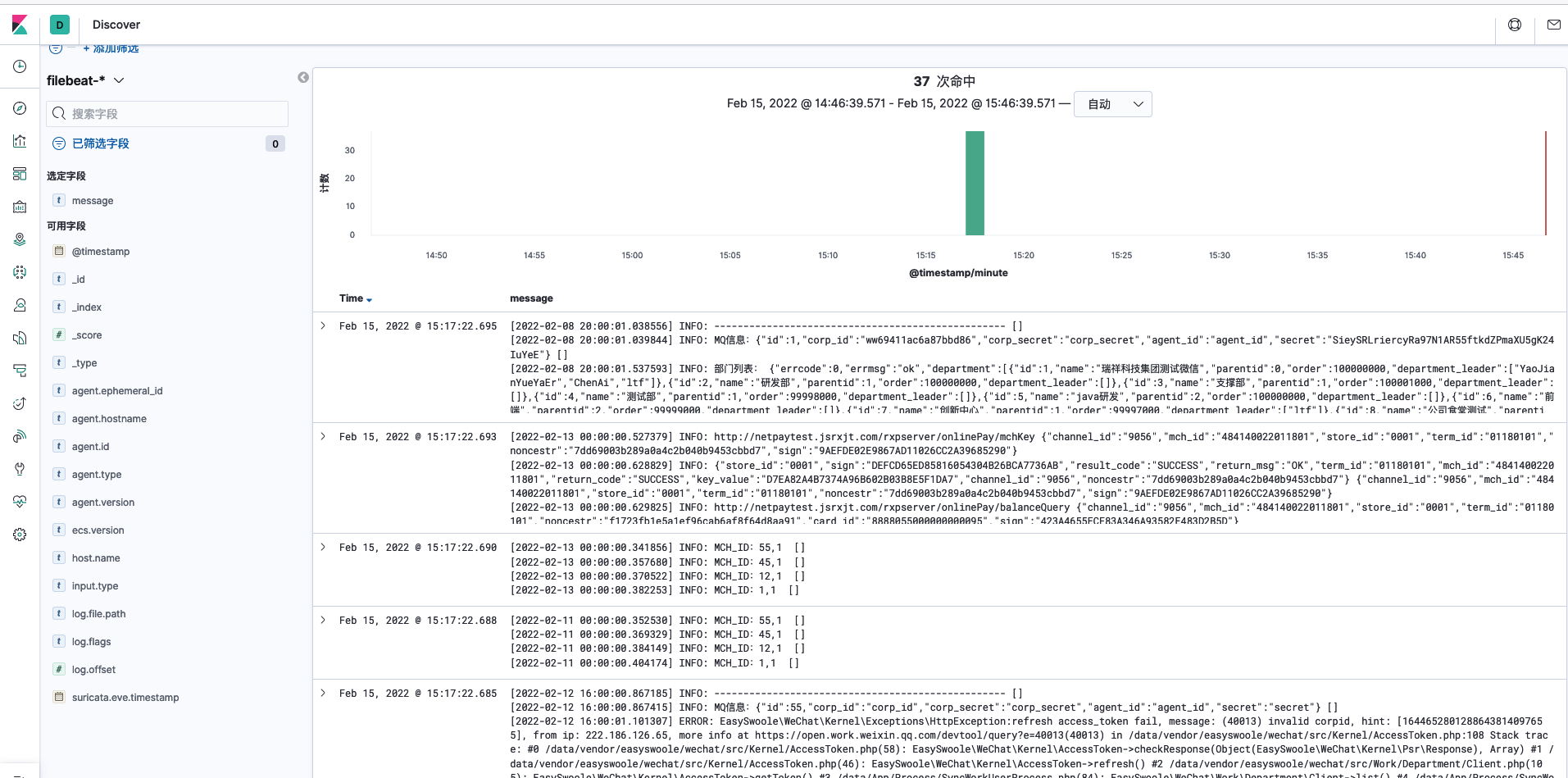

Add a log file to the collected directory, or append to an existing one, then check in Kibana whether a filebeat index has been created.
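This final check can also be scripted from the host. The sketch below assumes the host log directory `/root/Log` from the earlier `docker run`, that Elasticsearch is reachable on `http://localhost:9200`, and that `curl` is installed. The helper names and the file name `smoke-test.log` are hypothetical; the file name only needs to match the `*.log` pattern in the configured paths.

```shell
# Hypothetical helper: append one line that starts with a date, so it
# matches the multiline pattern and counts as its own event.
# $1: host directory that is mounted as the Filebeat log directory.
append_sample_log() {
  logdir="${1:-/root/Log}"
  printf '%s INFO filebeat smoke test\n' "$(date '+%Y-%m-%d %H:%M:%S')" \
    >> "$logdir/smoke-test.log"
}

# Hypothetical helper: list indices whose names start with "filebeat-".
# $1: Elasticsearch base URL.
list_filebeat_indices() {
  curl -fsS "${1:-http://localhost:9200}/_cat/indices/filebeat-*?v"
}
```

Running `append_sample_log`, waiting a few seconds, and then running `list_filebeat_indices` should show an index whose document count grows as new lines arrive.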