redis-shake is a tool for redis data synchronization, open-sourced by the Alibaba Cloud Redis & MongoDB team. Download link: here.

# 1. Basic features

redis-shake is a product we built by improving on redis-port. It supports four functions: decode, restore, dump, and sync. The following mainly introduces sync.

- restore: restores an RDB file to the target redis database.

- dump: backs up the full data of the source redis as an RDB file.

- decode: reads an RDB file, parses it, and stores the result in json format.

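- sync: synchronizes data between the source redis and the target redis. It supports both full and incremental migration, synchronization from on-premises to Alibaba Cloud, and synchronization between different on-premises environments. Standalone, master-replica, and cluster editions can all sync with one another. Note that if the source is a cluster, a single RedisShake can be started to pull from the different db nodes, and the move slot feature must not be enabled on the source. If the target is a cluster, data can be written to one or more db nodes.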

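- rump: synchronizes data between the source redis and the target redis, full migration only. It migrates with the scan and restore commands and supports migration across different cloud vendors and different redis versions.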
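The rump mode described above can be sketched roughly as a scan loop that dumps each key on the source and restores it on the target. This is only an illustration under stated assumptions: `FakeRedis` is a hypothetical in-memory stand-in (not a real client and not redis-shake code), and its cursor is a plain list index rather than a real redis scan cursor.

```python
from typing import Dict, List, Optional, Tuple


class FakeRedis:
    """Hypothetical in-memory stand-in with scan/dump/restore-like methods."""

    def __init__(self, data: Optional[Dict[str, bytes]] = None) -> None:
        self.data: Dict[str, bytes] = dict(data or {})

    def scan(self, cursor: int, count: int) -> Tuple[int, List[str]]:
        # Return one batch of keys; a next cursor of 0 means the iteration is over.
        keys = sorted(self.data)
        batch = keys[cursor:cursor + count]
        next_cursor = cursor + count
        if next_cursor >= len(keys):
            next_cursor = 0
        return next_cursor, batch

    def dump(self, key: str) -> Optional[bytes]:
        # Serialized value of the key, or None if the key is gone.
        return self.data.get(key)

    def restore(self, key: str, payload: bytes, replace: bool) -> bool:
        # Refuse to overwrite an existing key unless replace is set.
        if key in self.data and not replace:
            return False
        self.data[key] = payload
        return True


def rump_copy(source: FakeRedis, target: FakeRedis,
              scan_count: int = 50, replace: bool = True) -> int:
    """Copy every key from source to target; return how many keys were written."""
    copied = 0
    cursor = 0
    while True:
        cursor, keys = source.scan(cursor, scan_count)
        for key in keys:
            payload = source.dump(key)
            if payload is None:  # key expired or was deleted between scan and dump
                continue
            if target.restore(key, payload, replace):
                copied += 1
        if cursor == 0:
            break
    return copied
```

Because it relies only on scan, dump and restore, this style of copy is a full migration and does not pick up writes that arrive after a key has been scanned.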

# 2. Basic principle

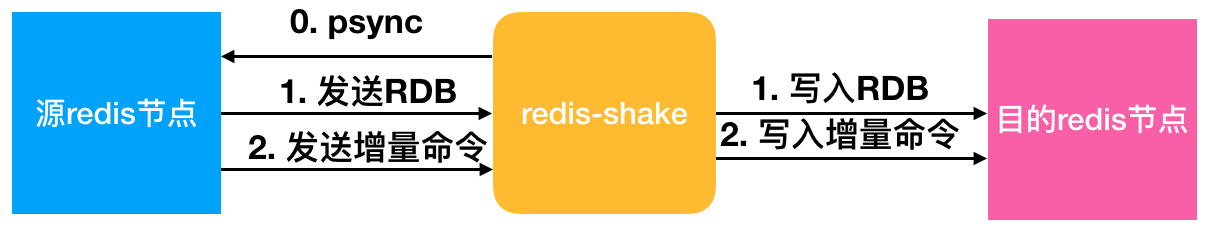

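The basic principle of redis-shake is to simulate a replica node joining the source redis cluster: it first pulls and replays the full data set, then pulls incremental data (via the psync command), as shown in the figure above.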

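If the source is in cluster mode, only one redis-shake needs to be started to pull the data, and move slot operations must not be enabled on the source. If the target is in cluster mode, data can be written to one node and the slots migrated afterwards; many-to-many writes are also possible.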

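Currently, redis-shake uses a single link to the target. Under normal conditions this is not a bottleneck, but in extreme cases with a high qps this part may limit performance, and we may optimize it later. In addition, redis-shake syncs data to the target asynchronously: reading and writing run in two separate threads, which reduces the sync slowdown caused by network latency.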
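The split between a reading thread and a writing thread can be sketched as a bounded queue between a producer and a consumer. This is a minimal illustration, not redis-shake's implementation (which is written in Go); `forward`, `send` and `buffer_size` are hypothetical names.

```python
import queue
import threading
from typing import Callable, Iterable

_DONE = object()  # sentinel that tells the writer the stream has ended


def forward(commands: Iterable[str], send: Callable[[str], None],
            buffer_size: int = 1024) -> None:
    """Read commands in one thread and send them in another, preserving order."""
    pending: "queue.Queue[object]" = queue.Queue(maxsize=buffer_size)

    def reader() -> None:
        # Stands in for pulling commands from the source.
        for command in commands:
            pending.put(command)
        pending.put(_DONE)

    def writer() -> None:
        # Stands in for writing commands to the target.
        while True:
            item = pending.get()
            if item is _DONE:
                return
            send(item)  # type: ignore[arg-type]

    threads = [threading.Thread(target=reader), threading.Thread(target=writer)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
```

With this layout a slow write to the target does not stall reading from the source until the queue is full, which is the effect the paragraph above describes.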

# 3. Efficiency

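The full sync stage runs concurrently and the incremental sync stage runs asynchronously, so latency can reach the millisecond level (depending on network latency). We also pull big keys in batches to optimize sync performance.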
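As a rough sketch of batching a big key, the fields of a large hash can be cut into fixed-size batches and written one batch at a time. This is an assumption-laden illustration: `split_fields` is a hypothetical helper, and it splits by field count, whereas the actual threshold in redis-shake is expressed in bytes.

```python
from typing import Dict, Iterator, List, Tuple


def split_fields(fields: Dict[str, bytes],
                 batch_size: int) -> Iterator[List[Tuple[str, bytes]]]:
    """Yield the (field, value) pairs of a large hash in batches of batch_size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    batch: List[Tuple[str, bytes]] = []
    for item in fields.items():
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly shorter batch
        yield batch
```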

# 4. Monitoring

Users can monitor redis-shake in real time by pulling metrics from the RESTful endpoint we provide: curl 127.0.0.1:9320/metric.
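The same endpoint can be polled from a script. The sketch below uses only the Python standard library; `metric_url` and `fetch_metric` are hypothetical helper names, and the response is returned as raw text because its exact format is not described here.

```python
import urllib.request


def metric_url(host: str = "127.0.0.1", port: int = 9320) -> str:
    """Build the metric URL; 9320 is the http_profile port used in this article."""
    return f"http://{host}:{port}/metric"


def fetch_metric(host: str = "127.0.0.1", port: int = 9320,
                 timeout: float = 3.0) -> str:
    """Fetch the metric endpoint and return the response body as text."""
    with urllib.request.urlopen(metric_url(host, port), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Calling `fetch_metric()` requires a running redis-shake with the RESTful port enabled.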

# 5. Verification

How can the correctness of the sync be verified? You can use our open-source redis-full-check; for how it works, see this blog post.

# 6. Support

- Supports syncing redis versions 2.8 to 5.0.

- Supports codis.

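- Supports on-premises to cloud, cloud to cloud, cloud to on-premises (Alibaba Cloud currently supports the master-replica edition), and other clouds to Alibaba Cloud, helping users build hybrid cloud scenarios flexibly.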

# 7. Features in development

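Resuming from a break point. After a disconnect, the sync resumes by offset, which reduces the performance impact of pulling the full data set again when the link breaks because of a primary/replica switchover or network jitter.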
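Resuming by offset maps onto the arguments of the redis `PSYNC` command: a known run id and offset ask for a partial resync, while `?` and `-1` ask for a full one. The helper below is a hypothetical sketch of that decision, not redis-shake code; `next_offset` is assumed to be the replication offset to resume from.

```python
from typing import List, Optional


def psync_args(run_id: Optional[str], next_offset: Optional[int]) -> List[str]:
    """Return the PSYNC command arguments for a full or a resumed sync."""
    if not run_id or next_offset is None or next_offset < 0:
        # No usable checkpoint: request a full resync.
        return ["PSYNC", "?", "-1"]
    # A checkpoint exists: ask the source to continue from the saved offset.
    return ["PSYNC", run_id, str(next_offset)]
```

Whether the source actually grants a partial resync depends on its replication backlog still covering the requested offset.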

# 8. Notes

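Supporting multi-active deployments depends on kernel capabilities; it cannot currently be solved at the channel layer alone. The redis kernel would need to provide a gtid concept and CRDT-based conflict resolution.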

# 9. Parameter explanation

Startup example (using sync):

vinllen@ ~/redis-shake/bin$ ./redis-shake -type=sync -conf=../conf/redis-shake.conf

The redis-shake.conf file under the conf path holds the configuration. Its contents, with some explanatory comments, are as follows:

# this is the configuration of redis-shake.

# id

id = redis-shake

# log file. if not configured, logs are printed to stdout.

log_file =

# pprof port

system_profile = 9310

# restful port, used to view metrics.

http_profile = 9320

# runtime.GOMAXPROCS, 0 means use cpu core number: runtime.NumCPU()

ncpu = 0

# parallel routines number used in RDB file syncing.

parallel = 4

# input RDB file. read from stdin, default is stdin ('/dev/stdin').

# used in `decode` and `restore`.

# in `decode` or `restore` mode, this is the RDB file to read.

input_rdb = local_dump

# output RDB file. default is stdout ('/dev/stdout').

# used in `decode` and `dump`.

# in `decode` or `dump` mode, this is the output RDB file.

output_rdb = local_dump

# source redis configuration.

# used in `dump` and `sync`.

# ip:port

# source redis address

source.address = 127.0.0.1:20441

# password.

source.password_raw = kLNIl691OZctWST

# auth type, don't modify it

source.auth_type = auth

# version number, default is 6 (6 for Redis Version <= 3.0.7, 7 for >=3.2.0)

source.version = 6

# target redis configuration. used in `restore` and `sync`.

# used in `restore` and `sync`.

# ip:port

# target redis address

target.address = 10.101.72.137:20551

# password.

target.password_raw = kLNIl691OZctWST

# auth type, don't modify it

target.auth_type = auth

# version number, default is 6 (6 for Redis Version <= 3.0.7, 7 for >=3.2.0)

target.version = 6

# all the data will come into this db. < 0 means disable.

# used in `restore` and `sync`.

target.db = -1

# use for expire key, set the time gap when source and target timestamp are not the same.

# used for expiring keys: when the clocks of the two ends differ, the target needs to add this value.

fake_time =

# force rewrite when destination restore has the key

# used in `restore` and `sync`.

# whether to overwrite when the source and target have the same key.

rewrite = true

# filter db or key or slot

# choose these db, e.g., 5, only choose db5. default is all.

# used in `restore` and `sync`.

# supports filtering by db: only the specified db passes.

filter.db =

# filter key with prefix string. multiple keys are separated by ';'.

# e.g., a;b;c

# default is all.

# used in `restore` and `sync`.

# supports filtering by key: only the specified keys pass; separated by semicolons.

filter.key =

# filter given slot, multiple slots are separated by ';'.

# e.g., 1;2;3

# used in `sync`.

# filter by slot: only the specified slots pass.

filter.slot =

# big key threshold, the default is 500 * 1024 * 1024. The field of the big key will be split in processing.

# big keys get special handling; set the big key threshold here.

big_key_threshold = 524288000

# use psync command.

# used in `sync`.

# the sync command is used by default; enabling this uses the psync command instead.

psync = false

# enable metric

# used in `sync`.

# whether to enable metric.

metric = true

# print in log

# whether to print metric to the log.

metric.print_log = true

# heartbeat

# send heartbeat to this url

# used in `sync`.

# heartbeat url; redis-shake sends heartbeats to this address.

heartbeat.url = http://127.0.0.1:8000

# interval by seconds

# heartbeat keep-alive interval.

heartbeat.interval = 3

# external info which will be included in heartbeat data.

# add extra information to the heartbeat message.

heartbeat.external = test external

# local network card to get ip address, e.g., "lo", "eth0", "en0"

# network interface used to obtain the ip.

heartbeat.network_interface =

# sender information.

# sender flush buffer size of byte.

# used in `sync`.

# byte size of the send buffer; exceeding this threshold forces a flush.

sender.size = 104857600

# sender flush buffer size of oplog number.

# used in `sync`.

# number of oplogs in the send buffer; exceeding this threshold forces a flush.

sender.count = 5000

# delay channel size. once one oplog is sent to target redis, the oplog id and timestamp will also stored in this delay queue. this timestamp will be used to calculate the time delay when receiving ack from target redis.

# used in `sync`.

# queue used by metric to calculate the delay.

sender.delay_channel_size = 65535

# ----------------splitter----------------

# below variables are useless for current opensource version so don't set.

# replace hash tag.

# used in `sync`.

replace_hash_tag = false

# used in `restore` and `dump`.

extra = false
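The file above is a flat list of `key = value` lines with `#` comments, so it can be read with a few lines of code when a script needs to inspect it. The parser below is a minimal sketch under that assumption; `parse_conf` is a hypothetical helper and is not how redis-shake itself loads its configuration.

```python
from typing import Dict


def parse_conf(text: str) -> Dict[str, str]:
    """Parse `key = value` lines, skipping blank lines and `#` comments."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if "=" not in line:
            continue  # not a setting line; ignore it
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()  # empty value means "not set"
    return settings
```

For example, `parse_conf(open("redis-shake.conf").read())["source.address"]` would return the configured source address as a string.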

# 10. Hands-on

# 10.1 Configuration file

# This file is the configuration of redis-shake.

# If you have any problem, please visit: https://github.com/alibaba/RedisShake/wiki/FAQ

# if you have questions, please check first: https://github.com/alibaba/RedisShake/wiki/FAQ

# current configuration version, do not modify.

# version of the current configuration file; please do not modify this value.

conf.version = 1

# id

id = redis-shake

# The log file name, if left blank, it will be printed to stdout,

# otherwise it will be printed to the specified file.

# log file name; if left blank, logs are printed to stdout, otherwise to the specified file.

# for example:

# log.file =

# log.file = /var/log/redis-shake.log

log.file =

# log level: "none", "error", "warn", "info", "debug".

# default is "info".

# log level, options: none error warn info debug

# default: info

log.level = info

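# directory where the pid file is stored; if left blank, it is written to the current directory.

# note that this is a directory; the pid file actually generated is {pid_path}/{id}.pid

# for example: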

# pid_path = ./

# pid_path = /var/run/

pid_path =

# pprof port.

system_profile = 9310

# restful port, set -1 means disable, in `restore` mode RedisShake will exit once finish restoring RDB only if this value

# is -1, otherwise, it'll wait forever.

# restful port for viewing metrics; -1 disables it. in `restore` mode, redis-shake exits after the RDB restore finishes only if this is -1; otherwise it blocks forever.

# http://127.0.0.1:9320/conf shows the configuration redis-shake is using

# http://127.0.0.1:9320/metric shows the sync status of redis-shake

http_profile = 9320

# parallel routines number used in RDB file syncing. default is 64.

# number of concurrent threads used to sync one RDB file.

parallel = 32

# source redis configuration.

# used in `dump`, `sync` and `rump`.

# source redis type, e.g. "standalone" (default), "sentinel" or "cluster".

# 1. "standalone": standalone db mode.

# 2. "sentinel": the redis address is read from sentinel.

# 3. "cluster": the source redis has several db.

# 4. "proxy": the proxy address, currently, only used in "rump" mode.

# used in `dump`, `sync` and `rump`.

# type of the source Redis, options: standalone sentinel cluster proxy

# note: proxy is only used in rump mode.

source.type = standalone

# ip:port

# the source address can be the following:

# 1. single db address. for "standalone" type.

# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.

# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type. e.g., 10.1.1.1:20331;10.1.1.2:20441.

# 4. proxy address(used in "rump" mode only). for "proxy" type.

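# source redis address. for sentinel or open source cluster mode, the input format is "master name:role to pull from (master or slave)@sentinel address"; other cluster

# architectures, such as codis, twemproxy and aliyun proxy, need the db addresses of all masters or slaves configured.

# address of the source redis

# 1. standalone mode: configure ip:port, e.g., 10.1.1.1:20331

# 2. cluster mode: configure the ip:port of all nodes, e.g., source.address = 10.1.1.1:20331;10.1.1.2:20441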

source.address = 127.0.0.1:6379

# source password, left blank means no password.

# source password; blank means no password.

source.password_raw =

# auth type, don't modify it

source.auth_type = auth

# tls enable, true or false. Currently, only support standalone.

# open source redis does NOT support tls so far, but some cloud versions do.

source.tls_enable = false

# input RDB file.

# used in `decode` and `restore`.

# if the input is list split by semicolon(;), redis-shake will restore the list one by one.

# in `decode` or `restore` mode, this is the RDB file to read. a list is supported, e.g., rdb.0;rdb.1;rdb.2

# redis-shake will restore them one by one.

source.rdb.input =

# the concurrence of RDB syncing, default is len(source.address) or len(source.rdb.input).

# used in `dump`, `sync` and `restore`. 0 means default.

# This is useless when source.type isn't cluster or the input is only one RDB.

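# pull concurrency. in `dump` or `sync` mode the default is the number of dbs in source.address; in `restore` mode the default is len(source.rdb.input).

# for example, if there are 5 db nodes/input rdb files but rdb.parallel=3, then only

# 3 dbs are pulled concurrently for full data; the rdb of the 4th db node is pulled only after one db has finished its rdb and entered the incremental stage,

# and so on. in the end, len(source.address) or len(rdb.input) incremental threads exist at the same time.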

source.rdb.parallel = 0

# for special cloud vendor: ucloud

# used in `decode` and `restore`.

# RDB files of the ucloud cluster edition add a slot prefix, which is stripped as a special case: ucloud_cluster.

source.rdb.special_cloud =

# target redis configuration. used in `restore`, `sync` and `rump`.

# the type of target redis can be "standalone", "proxy" or "cluster".

# 1. "standalone": standalone db mode.

# 2. "sentinel": the redis address is read from sentinel.

# 3. "cluster": open source cluster (not supported currently).

# 4. "proxy": proxy layer ahead redis. Data will be inserted in a round-robin way if more than 1 proxy given.

# type of the target redis; supports four modes: standalone, sentinel, cluster and proxy.

target.type = standalone

# ip:port

# the target address can be the following:

# 1. single db address. for "standalone" type.

# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.

# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type.

# 4. proxy address. for "proxy" type.

target.address = 127.0.0.1:6378

# target password, left blank means no password.

# target password; blank means no password.

target.password_raw =

# auth type, don't modify it

target.auth_type = auth

# all the data will be written into this db. < 0 means disable.

target.db = -1

# Format: 0-5;1-3 ,Indicates that the data of the source db0 is written to the target db5, and

# the data of the source db1 is all written to the target db3.

# Note: When target.db is specified, target.dbmap will not take effect.

# e.g., 0-5;1-3 means data from source db0 is written to target db5, and data from source db1 is written to target db3

# when target.db is enabled, target.dbmap does not take effect.

target.dbmap =

# tls enable, true or false. Currently, only support standalone.

# open source redis does NOT support tls so far, but some cloud versions do.

target.tls_enable = false

# output RDB file prefix.

# used in `decode` and `dump`.

# in `decode` or `dump` mode, this is the prefix of the output rdb. e.g., if the input has 3 dbs, the dumps are:

# ${output_rdb}.0, ${output_rdb}.1, ${output_rdb}.2

target.rdb.output = local_dump

# some redis proxy like twemproxy doesn't support to fetch version, so please set it here.

# e.g., target.version = 4.0

target.version =

# use for expire key, set the time gap when source and target timestamp are not the same.

# used for expiring keys: when the clocks of the two ends differ, the target needs to add this value.

fake_time =

# how to solve when destination restore has the same key.

# rewrite: overwrite.

# none: panic directly.

# ignore: skip this key. not used in rump mode.

# used in `restore`, `sync` and `rump`.

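# whether to overwrite when the source and target have the same key. options:

# 1. rewrite: the source overwrites the target

# 2. none: the process exits as soon as this happens

# 3. ignore: keep the target key and skip the key being synced from the source. this value does not take effect in rump mode.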

key_exists = rewrite

# filter db, key, slot, lua.

# filter db.

# used in `restore`, `sync` and `rump`.

# e.g., "0;5;10" means match db0, db5 and db10.

# at most one of `filter.db.whitelist` and `filter.db.blacklist` parameters can be given.

# if the filter.db.whitelist is not empty, the given db list will be passed while others filtered.

# if the filter.db.blacklist is not empty, the given db list will be filtered while others passed.

# all dbs will be passed if no condition given.

# the given dbs pass, e.g., 0;5;10 lets db0, db5 and db10 pass while the others are filtered

filter.db.whitelist =

# the given dbs are filtered, e.g., 0;5;10 filters db0, db5 and db10 while the others pass

filter.db.blacklist =

# filter key with prefix string. multiple keys are separated by ';'.

# e.g., "abc;bzz" matches "abc", "abc1", "abcxxx", "bzz" and "bzzwww".

# used in `restore`, `sync` and `rump`.

# at most one of `filter.key.whitelist` and `filter.key.blacklist` parameters can be given.

# if the filter.key.whitelist is not empty, the given keys will be passed while others filtered.

# if the filter.key.blacklist is not empty, the given keys will be filtered while others passed.

# all the namespace will be passed if no condition given.

# filter keys by prefix: only keys with the given prefixes pass; separated by semicolons. e.g., abc lets abc, abc1 and abcxxx pass

filter.key.whitelist =

# filter keys by prefix: keys with the given prefixes do not pass; separated by semicolons. e.g., abc blocks abc, abc1 and abcxxx

filter.key.blacklist =

# filter given slot, multiple slots are separated by ';'.

# e.g., 1;2;3

# used in `sync`.

# filter by slot: only the given slots pass

filter.slot =

# filter lua script. true means not pass. However, in redis 5.0, the lua

# converts to transaction(multi+{commands}+exec) which will be passed.

# controls whether lua scripts are blocked; true means they do not pass

filter.lua = false

# big key threshold, the default is 500 * 1024 * 1024 bytes. If the value is bigger than

# this given value, all the fields will be split and written into the target in order. If

# the target Redis type is Codis, this should be set to 1, please checkout FAQ to find

# the reason.

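# a key that is not large is written to the target by calling restore directly. if the value of a key exceeds the given

# size in bytes, it is written one by one in batches. if the target is Codis, set this to 1; see the FAQ for the reason.

# setting it to 1 is also recommended when the target's major version is lower than the source's.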

big_key_threshold = 524288000

# enable metric

# used in `sync`.

# whether to enable metric

metric = true

# print in log

# whether to print metric to the log

metric.print_log = false

# sender information.

# sender flush buffer size of byte.

# used in `sync`.

# byte size of the send buffer; exceeding this threshold forces a flush

sender.size = 104857600

# sender flush buffer size of oplog number.

# used in `sync`. flush sender buffer when bigger than this threshold.

# number of oplogs in the send buffer; exceeding this threshold forces a flush. when the target is a cluster, increasing

# this value uses some more memory.

sender.count = 4095

# delay channel size. once one oplog is sent to target redis, the oplog id and timestamp will also

# stored in this delay queue. this timestamp will be used to calculate the time delay when receiving

# ack from target redis.

# used in `sync`.

# queue used by metric to calculate the delay

sender.delay_channel_size = 65535

# enable keep_alive option in TCP when connecting redis.

# the unit is second.

# 0 means disable.

# TCP keep-alive parameter, in seconds; 0 means disabled.

keep_alive = 0

# used in `rump`.

# number of keys captured each time. default is 100.

# number of keys per scan; defaults to 100 if not configured.

scan.key_number = 50

# used in `rump`.

# we support some special redis types that don't use default `scan` command like alibaba cloud and tencent cloud.

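# some versions use a special format that differs from the normal scan command, so we adapted to them specially. currently supported: the Tencent Cloud cluster edition "tencent_cluster"

# and the Alibaba Cloud cluster edition "aliyun_cluster". note that the master-replica edition needs no configuration; this applies only to the cluster edition.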

scan.special_cloud =

# used in `rump`.

# we support to fetching data from given file which marks the key list.

# some cloud versions support neither sync/psync nor scan; we support reading the full key list from a file and fetching those keys: one key per line.

scan.key_file =

# limit the rate of transmission. Only used in `rump` currently.

# e.g., qps = 1000 means pass 1000 keys per second. default is 500,000(0 means default)

qps = 200000

# enable resume from break point, please visit xxx to see more details.

# switch for resuming from a break point

resume_from_break_point = false

# ----------------splitter----------------

# below variables are useless for current open source version so don't set.

# replace hash tag.

# used in `sync`.

replace_hash_tag = false
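The `target.dbmap` format described in the configuration above (`0-5;1-3`) and its interaction with `target.db` can be illustrated with a small sketch. The helper names are hypothetical, and leaving an unmapped source db unchanged is an assumption of this sketch rather than something the configuration comments state.

```python
from typing import Dict


def parse_dbmap(spec: str) -> Dict[int, int]:
    """Parse a spec such as "0-5;1-3" into {source_db: target_db}."""
    mapping: Dict[int, int] = {}
    for pair in spec.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        src, sep, dst = pair.partition("-")
        if not sep:
            raise ValueError(f"invalid dbmap entry: {pair!r}")
        mapping[int(src)] = int(dst)
    return mapping


def resolve_target_db(source_db: int, target_db: int,
                      dbmap: Dict[int, int]) -> int:
    """Pick the db to write to on the target for a key read from source_db."""
    if target_db >= 0:
        # target.db is enabled, so target.dbmap does not take effect.
        return target_db
    # Assumption: a source db without a mapping keeps its own number.
    return dbmap.get(source_db, source_db)
```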

### Run the script

redis|⇒ ./redis-shake.darwin -type=sync -conf=redis-shake.conf

2022/02/07 15:00:02 [WARN] source.auth_type[auth] != auth

2022/02/07 15:00:02 [WARN] target.auth_type[auth] != auth

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2022/02/07 15:00:02 [INFO] source rdb[127.0.0.1:6379] checksum[yes]

2022/02/07 15:00:02 [WARN]

______________________________

\ \ _ ______ |

\ \ / \___-=O'/|O'/__|

\ RedisShake, here we go !! \_______\ / | / )

/ / '/-==__ _/__|/__=-| -GM

/ Alibaba Cloud / * \ | |

/ / (o)

------------------------------

if you have any problem, please visit https://github.com/alibaba/RedisShake/wiki/FAQ

2022/02/07 15:00:02 [INFO] redis-shake configuration: {"ConfVersion":1,"Id":"redis-shake","LogFile":"","LogLevel":"info","SystemProfile":9310,"HttpProfile":9320,"Parallel":32,"SourceType":"standalone","SourceAddress":"127.0.0.1:6379","SourcePasswordRaw":"***","SourcePasswordEncoding":"***","SourceAuthType":"auth","SourceTLSEnable":false,"SourceRdbInput":[],"SourceRdbParallel":1,"SourceRdbSpecialCloud":"","TargetAddress":"127.0.0.1:6378","TargetPasswordRaw":"***","TargetPasswordEncoding":"***","TargetDBString":"-1","TargetDBMapString":"","TargetAuthType":"auth","TargetType":"standalone","TargetTLSEnable":false,"TargetRdbOutput":"local_dump","TargetVersion":"6.2.6","FakeTime":"","KeyExists":"rewrite","FilterDBWhitelist":[],"FilterDBBlacklist":[],"FilterKeyWhitelist":[],"FilterKeyBlacklist":[],"FilterSlot":[],"FilterLua":false,"BigKeyThreshold":524288000,"Metric":true,"MetricPrintLog":false,"SenderSize":104857600,"SenderCount":4095,"SenderDelayChannelSize":65535,"KeepAlive":0,"PidPath":"","ScanKeyNumber":50,"ScanSpecialCloud":"","ScanKeyFile":"","Qps":200000,"ResumeFromBreakPoint":false,"Psync":true,"NCpu":0,"HeartbeatUrl":"","HeartbeatInterval":10,"HeartbeatExternal":"","HeartbeatNetworkInterface":"","ReplaceHashTag":false,"ExtraInfo":false,"SockFileName":"","SockFileSize":0,"FilterKey":null,"FilterDB":"","Rewrite":false,"SourceAddressList":["127.0.0.1:6379"],"TargetAddressList":["127.0.0.1:6378"],"SourceVersion":"6.2.6","HeartbeatIp":"127.0.0.1","ShiftTime":0,"TargetReplace":false,"TargetDB":-1,"Version":"develop,cc226f841e2e244c48246ebfcfd5a50396b59710,go1.15.7,2021-09-03_10:06:55","Type":"sync","TargetDBMap":null}

2022/02/07 15:00:02 [INFO] DbSyncer[0] starts syncing data from 127.0.0.1:6379 to [127.0.0.1:6378] with http[9321], enableResumeFromBreakPoint[false], slot boundary[-1, -1]

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2022/02/07 15:00:02 [INFO] DbSyncer[0] psync connect '127.0.0.1:6379' with auth type[auth] OK!

2022/02/07 15:00:02 [INFO] DbSyncer[0] psync send listening port[9320] OK!

2022/02/07 15:00:02 [INFO] DbSyncer[0] try to send 'psync' command: run-id[?], offset[-1]

2022/02/07 15:00:02 [INFO] Event:FullSyncStart Id:redis-shake

2022/02/07 15:00:02 [INFO] DbSyncer[0] psync runid = 1b999e095bc535328ad09d44c9d579f436289161, offset = 0, fullsync

2022/02/07 15:00:02 [INFO] DbSyncer[0] rdb file size = 8705

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:02 [INFO] Aux information key:redis-ver value:6.2.6

2022/02/07 15:00:02 [INFO] Aux information key:redis-bits value:64

2022/02/07 15:00:02 [INFO] Aux information key:ctime value:1644217202

2022/02/07 15:00:02 [INFO] Aux information key:used-mem value:1942520

2022/02/07 15:00:02 [INFO] Aux information key:repl-stream-db value:0

2022/02/07 15:00:02 [INFO] Aux information key:repl-id value:1b999e095bc535328ad09d44c9d579f436289161

2022/02/07 15:00:02 [INFO] Aux information key:repl-offset value:0

2022/02/07 15:00:02 [INFO] Aux information key:aof-preamble value:0

2022/02/07 15:00:02 [INFO] db_size:12 expire_size:0

2022/02/07 15:00:02 [INFO] db_size:1 expire_size:0

2022/02/07 15:00:02 [INFO] db_size:4 expire_size:0

2022/02/07 15:00:02 [INFO] db_size:1 expire_size:0

2022/02/07 15:00:02 [INFO] db_size:4 expire_size:0

2022/02/07 15:00:02 [INFO] db_size:1 expire_size:0

2022/02/07 15:00:02 [INFO] db_size:9 expire_size:8

2022/02/07 15:00:02 [INFO] db_size:8 expire_size:0

2022/02/07 15:00:03 [INFO] DbSyncer[0] total = 8.50KB - 8.50KB [100%] entry=40

2022/02/07 15:00:03 [INFO] DbSyncer[0] sync rdb done

2022/02/07 15:00:03 [INFO] input password is empty, skip auth address[127.0.0.1:6378] with type[auth].

2022/02/07 15:00:03 [INFO] DbSyncer[0] FlushEvent:IncrSyncStart Id:redis-shake

2022/02/07 15:00:03 [INFO] input password is empty, skip auth address[127.0.0.1:6379] with type[auth].

2022/02/07 15:00:04 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:05 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:06 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:07 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:08 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:09 [INFO] DbSyncer[0] sync: +forwardCommands=1 +filterCommands=0 +writeBytes=4

2022/02/07 15:00:10 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:11 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:12 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:13 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:14 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:15 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:16 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:17 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:18 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:19 [INFO] DbSyncer[0] sync: +forwardCommands=1 +filterCommands=0 +writeBytes=4

2022/02/07 15:00:20 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:21 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:22 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:23 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:24 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0

2022/02/07 15:00:25 [INFO] DbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
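The per-second `sync:` lines in the log above can be totalled with a short script to see how much incremental traffic was forwarded. This is a sketch that assumes the log format shown above; `summarize_sync` is a hypothetical helper.

```python
import re
from typing import Dict, Iterable

# Matches the counters on lines such as:
# ... DbSyncer[0] sync: +forwardCommands=1 +filterCommands=0 +writeBytes=4
_SYNC_LINE = re.compile(
    r"\+forwardCommands=(\d+) \+filterCommands=(\d+) \+writeBytes=(\d+)"
)


def summarize_sync(lines: Iterable[str]) -> Dict[str, int]:
    """Sum the incremental counters over all matching log lines."""
    totals = {"forwardCommands": 0, "filterCommands": 0, "writeBytes": 0}
    for line in lines:
        match = _SYNC_LINE.search(line)
        if match is None:
            continue  # not a sync counter line
        totals["forwardCommands"] += int(match.group(1))
        totals["filterCommands"] += int(match.group(2))
        totals["writeBytes"] += int(match.group(3))
    return totals
```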

# 10.2 Verify the data

https://github.com/alibaba/RedisFullCheck

[root@62919ff17f79 redis-full-check-1.4.8]# ./redis-full-check -s 10.7.3.40:6379 -t 10.7.3.40:6378

[INFO 2022-02-07-07:02:52 main.go:65]: init log success

[INFO 2022-02-07-07:02:52 main.go:168]: configuration: {10.7.3.40:6379 auth 0 -1 10.7.3.40:6378 auth 0 -1 result.db 3 2 unknown unknown unknown 15000 5 256 5 false 16384 20445 false}

[INFO 2022-02-07-07:02:52 main.go:170]: ---------

[INFO 2022-02-07-07:02:52 full_check.go:238]: sourceDbType=0, p.sourcePhysicalDBList=[meaningless]

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=3:keys=4

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=4:keys=1

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=10:keys=4

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=11:keys=1

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=14:keys=9

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=15:keys=8

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=0:keys=12

[INFO 2022-02-07-07:02:52 full_check.go:243]: db=1:keys=1

[INFO 2022-02-07-07:02:52 full_check.go:253]: ---------------- start 1th time compare

[INFO 2022-02-07-07:02:52 full_check.go:278]: start compare db 14

[INFO 2022-02-07-07:02:52 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:02:53 full_check.go:203]: stat:

times:1, db:14, dbkeys:9, finish:33%, finished:true

KeyScan:{9 9 0}

[INFO 2022-02-07-07:02:53 full_check.go:278]: start compare db 15

[INFO 2022-02-07-07:02:53 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:02:55 full_check.go:203]: stat:

times:1, db:15, dbkeys:8, finish:33%, finished:true

KeyScan:{8 8 0}

[INFO 2022-02-07-07:02:55 full_check.go:278]: start compare db 0

[INFO 2022-02-07-07:02:55 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:02:57 full_check.go:203]: stat:

times:1, db:0, dbkeys:12, finish:33%, finished:true

KeyScan:{12 12 0}

[INFO 2022-02-07-07:02:57 full_check.go:278]: start compare db 1

[INFO 2022-02-07-07:02:57 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:02:58 full_check.go:203]: stat:

times:1, db:1, dbkeys:1, finish:33%, finished:true

KeyScan:{1 1 0}

[INFO 2022-02-07-07:02:58 full_check.go:278]: start compare db 3

[INFO 2022-02-07-07:02:58 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:02:59 full_check.go:203]: stat:

times:1, db:3, dbkeys:4, finish:33%, finished:true

KeyScan:{4 4 0}

[INFO 2022-02-07-07:02:59 full_check.go:278]: start compare db 4

[INFO 2022-02-07-07:02:59 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:03:00 full_check.go:203]: stat:

times:1, db:4, dbkeys:1, finish:33%, finished:true

KeyScan:{1 1 0}

[INFO 2022-02-07-07:03:00 full_check.go:278]: start compare db 10

[INFO 2022-02-07-07:03:00 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:03:01 full_check.go:203]: stat:

times:1, db:10, dbkeys:4, finish:33%, finished:true

KeyScan:{4 4 0}

[INFO 2022-02-07-07:03:01 full_check.go:278]: start compare db 11

[INFO 2022-02-07-07:03:01 scan.go:20]: build connection[source redis addr: [10.7.3.40:6379]]

[INFO 2022-02-07-07:03:03 full_check.go:203]: stat:

times:1, db:11, dbkeys:1, finish:33%, finished:false

KeyScan:{1 0 1}

[INFO 2022-02-07-07:03:03 full_check.go:203]: stat:

times:1, db:11, dbkeys:1, finish:33%, finished:true

KeyScan:{1 0 1}

[INFO 2022-02-07-07:03:03 full_check.go:250]: wait 5 seconds before start

[INFO 2022-02-07-07:03:08 full_check.go:253]: ---------------- start 2th time compare

[INFO 2022-02-07-07:03:08 full_check.go:278]: start compare db 0

[INFO 2022-02-07-07:03:08 full_check.go:203]: stat:

times:2, db:0, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:08 full_check.go:278]: start compare db 1

[INFO 2022-02-07-07:03:08 full_check.go:203]: stat:

times:2, db:1, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:08 full_check.go:278]: start compare db 3

[INFO 2022-02-07-07:03:08 full_check.go:203]: stat:

times:2, db:3, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:08 full_check.go:278]: start compare db 4

[INFO 2022-02-07-07:03:09 full_check.go:203]: stat:

times:2, db:4, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:09 full_check.go:278]: start compare db 10

[INFO 2022-02-07-07:03:09 full_check.go:203]: stat:

times:2, db:10, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:09 full_check.go:278]: start compare db 11

[INFO 2022-02-07-07:03:09 full_check.go:203]: stat:

times:2, db:11, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:09 full_check.go:278]: start compare db 14

[INFO 2022-02-07-07:03:09 full_check.go:203]: stat:

times:2, db:14, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:09 full_check.go:278]: start compare db 15

[INFO 2022-02-07-07:03:09 full_check.go:203]: stat:

times:2, db:15, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:09 full_check.go:250]: wait 5 seconds before start

[INFO 2022-02-07-07:03:15 full_check.go:253]: ---------------- start 3th time compare

[INFO 2022-02-07-07:03:15 full_check.go:278]: start compare db 3

[INFO 2022-02-07-07:03:15 full_check.go:203]: stat:

times:3, db:3, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:15 full_check.go:278]: start compare db 4

[INFO 2022-02-07-07:03:15 full_check.go:203]: stat:

times:3, db:4, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:15 full_check.go:278]: start compare db 10

[INFO 2022-02-07-07:03:16 full_check.go:203]: stat:

times:3, db:10, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:16 full_check.go:278]: start compare db 11

[INFO 2022-02-07-07:03:16 full_check.go:203]: stat:

times:3, db:11, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:16 full_check.go:278]: start compare db 14

[INFO 2022-02-07-07:03:16 full_check.go:203]: stat:

times:3, db:14, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:16 full_check.go:278]: start compare db 15

[INFO 2022-02-07-07:03:16 full_check.go:203]: stat:

times:3, db:15, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:16 full_check.go:278]: start compare db 0

[INFO 2022-02-07-07:03:16 full_check.go:203]: stat:

times:3, db:0, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:16 full_check.go:278]: start compare db 1

[INFO 2022-02-07-07:03:16 full_check.go:203]: stat:

times:3, db:1, finished:true

KeyScan:{0 0 0}

[INFO 2022-02-07-07:03:16 full_check.go:328]: --------------- finished! ----------------

all finish successfully, totally 0 key(s) and 0 field(s) conflict
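When the check runs from a script, the final summary line of the output above can be parsed to decide whether the sync is consistent. This is a sketch that assumes the summary wording shown above; `conflict_counts` is a hypothetical helper.

```python
import re
from typing import Optional, Tuple

_SUMMARY = re.compile(r"totally (\d+) key\(s\) and (\d+) field\(s\) conflict")


def conflict_counts(output: str) -> Optional[Tuple[int, int]]:
    """Return (conflicting keys, conflicting fields), or None if no summary is found."""
    match = _SUMMARY.search(output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))
```

A result of `(0, 0)` corresponds to the run shown above, where no key or field conflicts were reported.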
